IQN

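Overview

IQN was proposed in Implicit Quantile Networks for Distributional Reinforcement Learning. Distributional RL models the probability distribution of the value function rather than only its expectation, which gives a fuller description of the return each action can yield. The key difference between IQN (Implicit Quantile Network) and QRDQN (Quantile Regression DQN) is that IQN introduces an implicit quantile network: a deterministic parametric function trained to reparameterize samples from a base distribution (e.g. tau drawn from U([0, 1])) into the corresponding quantile values of the target distribution. QRDQN, in contrast, directly learns a fixed, predefined set of quantiles.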

Quick Facts

IQN is a model-free and value-based RL algorithm.

IQN only supports discrete action spaces.

IQN is an off-policy algorithm.

IQN usually uses eps-greedy or multinomial sampling for exploration.

IQN can be combined with a recurrent neural network (RNN).
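The eps-greedy exploration mentioned above can be sketched as follows. This is a minimal illustration, not DI-engine's collector code: the function name `eps_greedy_action` and the input layout (one row per sampled tau, one column per action) are assumptions made for this example. The greedy action is taken with respect to the mean of the sampled quantile values, which approximates Q(s, a).

```python
import random


def eps_greedy_action(quantile_values, eps, rng=random):
    """Pick an action from per-quantile value estimates.

    quantile_values: list of rows, one row per sampled tau, one entry per
                     action (hypothetical layout chosen for this sketch).
    eps: probability of taking a uniformly random action.
    """
    num_actions = len(quantile_values[0])
    # Explore: with probability eps, take a uniformly random action.
    if rng.random() < eps:
        return rng.randrange(num_actions)
    # Exploit: approximate Q(s, a) by averaging each action's quantile values.
    num_quantiles = len(quantile_values)
    q_values = [
        sum(row[a] for row in quantile_values) / num_quantiles
        for a in range(num_actions)
    ]
    return max(range(num_actions), key=lambda a: q_values[a])
```

With `eps=0.0` the function is purely greedy; with `eps=1.0` it always samples a random action.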

Key Equations

In the implicit quantile network, a sampled quantile fraction tau is first encoded into an embedding vector via:

\[\phi_{j}(\tau):=\operatorname{ReLU}\left(\sum_{i=0}^{n-1} \cos (\pi i \tau) w_{i j}+b_{j}\right)\]

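The quantile embedding is then multiplied element-wise with the embedding of the environment observation, and subsequent fully connected layers map the resulting product vector to the corresponding quantile value.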
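The two steps above (cosine embedding of tau, then element-wise product with the observation embedding) can be sketched in plain Python. This is an illustrative sketch with random, untrained parameters; the names `quantile_embedding` and `modulate` are hypothetical, and the final fully connected layers that produce the quantile values are left out.

```python
import math
import random


def quantile_embedding(tau, weights, bias):
    """phi_j(tau) = ReLU(sum_i cos(pi * i * tau) * w_ij + b_j).

    weights: n rows (one per cosine feature i), each with d entries (one per j).
    bias: d entries.
    """
    n, d = len(weights), len(bias)
    cos_features = [math.cos(math.pi * i * tau) for i in range(n)]
    return [
        max(0.0, sum(cos_features[i] * weights[i][j] for i in range(n)) + bias[j])
        for j in range(d)
    ]


def modulate(state_embedding, tau_embedding):
    """Element-wise (Hadamard) product of observation and quantile embeddings."""
    return [s * p for s, p in zip(state_embedding, tau_embedding)]


# Toy usage with random parameters (n = 4 cosine features, d = 3 outputs).
rng = random.Random(0)
n, d = 4, 3
weights = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(n)]
bias = [0.0] * d
tau = rng.random()                  # tau ~ U([0, 1])
state_embedding = [0.5, -1.0, 2.0]  # stand-in for the encoder output
fused = modulate(state_embedding, quantile_embedding(tau, weights, bias))
```

Because the observation embedding and the quantile embedding are multiplied element-wise, both must have the same dimension d.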

关键图¶

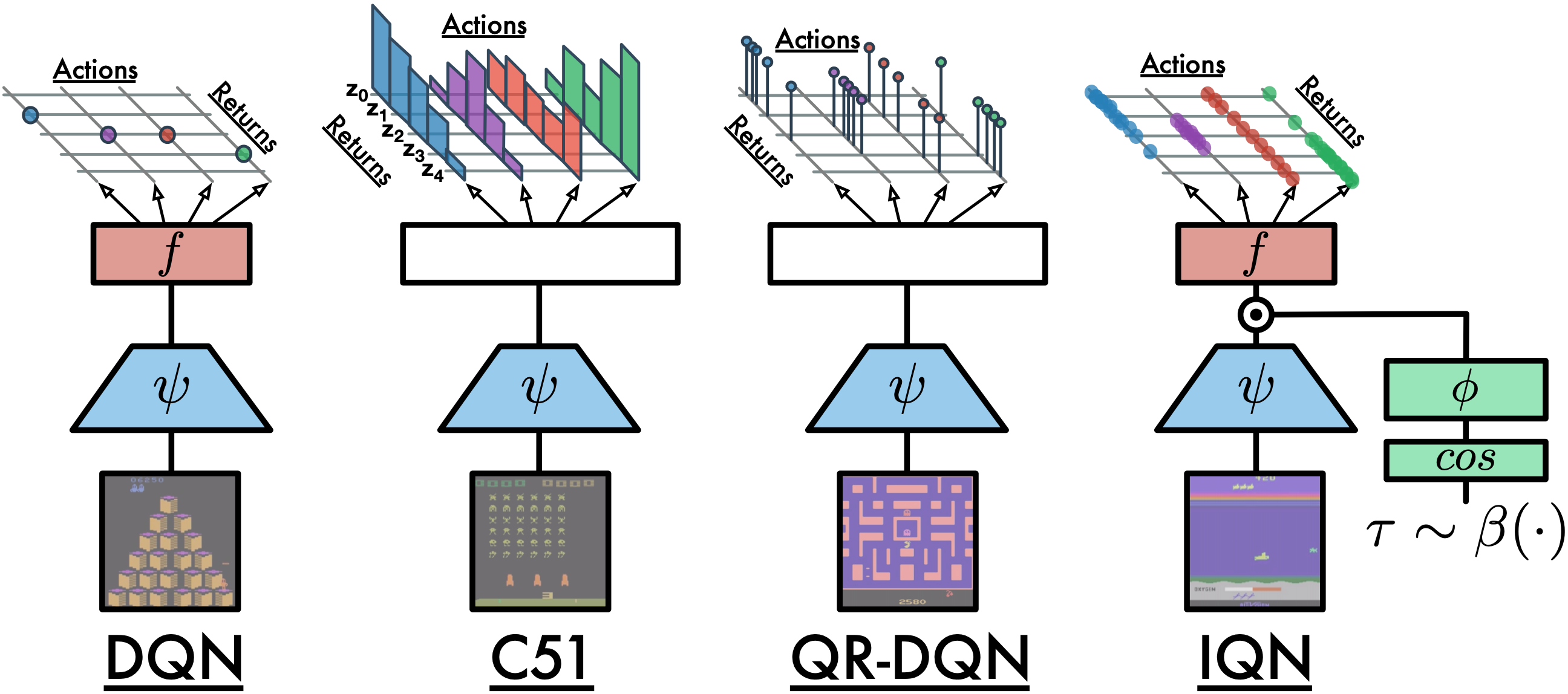

以下是DQN、C51、QRDQN和IQN之间的比较:

Extensions

- IQN can be combined with:

  - Prioritized Experience Replay (PER)

    Tip

    Whether PER improves the performance of IQN depends on the task and the training strategy.

  - Multi-step TD loss

  - Double target network

  - RNN
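For the multi-step TD loss listed above, the n-step target can be sketched as a discounted sum of rewards plus a bootstrapped tail. This is a generic illustration rather than DI-engine's implementation; `nstep_return` is a hypothetical helper, and in IQN the bootstrap value would be one sampled target quantile value rather than a scalar Q value.

```python
def nstep_return(rewards, gamma, bootstrap_value=0.0):
    """Discounted n-step target: sum_k gamma^k * r_k + gamma^n * bootstrap_value."""
    n = len(rewards)
    discounted = sum((gamma ** k) * r for k, r in enumerate(rewards))
    return discounted + (gamma ** n) * bootstrap_value
```

With `nstep=3`, three consecutive rewards are summed and the value estimate at the fourth state is discounted by `gamma ** 3`.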

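Implementation

Tip

Our IQN benchmark results use the same hyperparameters as DQN, except for the hyperparameter specific to IQN, the number of quantiles, which is empirically set to 32. Setting the number of quantiles above 64 is not recommended: it brings only marginal gains while adding forward-pass latency.

The default config of IQN is defined as follows: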

- class ding.policy.iqn.IQNPolicy(cfg: EasyDict, model: Module | None = None, enable_field: List[str] | None = None)

- Overview:

Policy class of IQN algorithm. Paper link: https://arxiv.org/pdf/1806.06923.pdf. Distributional RL is a direction of RL whose core idea is to estimate the distribution of the action value instead of only its expectation. The difference between IQN and DQN is that IQN uses quantile regression to estimate the quantile values of the action-value distribution, while DQN estimates only its expectation.

- Config:

| ID | Symbol | Type | Default Value | Description | Other(Shape) |
|---|---|---|---|---|---|
| 1 | type | str | qrdqn | RL policy register name, refer to registry POLICY_REGISTRY | this arg is optional, a placeholder |
| 2 | cuda | bool | False | Whether to use cuda for network | this arg can be different from modes |
| 3 | on_policy | bool | False | Whether the RL algorithm is on-policy or off-policy | |
| 4 | priority | bool | True | Whether use priority (PER) | priority sample, update priority |
| 6 | other.eps.start | float | 0.05 | Start value for epsilon decay. It's small because rainbow use noisy net. | |
| 7 | other.eps.end | float | 0.05 | End value for epsilon decay. | |
| 8 | discount_factor | float | 0.97, [0.95, 0.999] | Reward's future discount factor, aka. gamma | may be 1 when sparse reward env |
| 9 | nstep | int | 3, [3, 5] | N-step reward discount sum for target q_value estimation | |
| 10 | learn.update_per_collect | int | 3 | How many updates (iterations) to train after collector's one collection. Only valid in serial training | this arg can vary across envs. Bigger val means more off-policy |
| 11 | learn.kappa | float | / | Threshold of Huber loss | |
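To make the dotted symbols in the table concrete, the sketch below arranges a subset of the documented fields as a nested dictionary. It is an assumption-laden illustration, not the actual default config: the nesting is inferred from the dotted names, `update_per_collect` is the assumed spelling of the update-count key, the `lookup` helper and the name `iqn_config_sketch` are hypothetical, and `kappa=1.0` is a placeholder because the table lists no default for it.

```python
# Hypothetical config fragment built only from fields documented in the table.
iqn_config_sketch = dict(
    cuda=False,
    on_policy=False,
    priority=True,
    discount_factor=0.97,
    nstep=3,
    learn=dict(
        update_per_collect=3,
        kappa=1.0,  # assumption: placeholder, the table gives no default value
    ),
    other=dict(
        eps=dict(start=0.05, end=0.05),
    ),
)


def lookup(cfg, dotted_key):
    """Resolve a dotted symbol such as 'other.eps.start' in a nested dict."""
    node = cfg
    for part in dotted_key.split('.'):
        node = node[part]
    return node
```

For example, `lookup(iqn_config_sketch, 'other.eps.start')` walks `other`, then `eps`, then `start`.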

The network interface used by IQN is defined as follows:

- class ding.model.template.q_learning.IQN(obs_shape: int | SequenceType, action_shape: int | SequenceType, encoder_hidden_size_list: SequenceType = [128, 128, 64], head_hidden_size: int | None = None, head_layer_num: int = 1, num_quantiles: int = 32, quantile_embedding_size: int = 128, activation: Module | None = ReLU(), norm_type: str | None = None)

- Overview:

The neural network structure and computation graph of IQN, which combines distributional RL and DQN. You can refer to paper Implicit Quantile Networks for Distributional Reinforcement Learning https://arxiv.org/pdf/1806.06923.pdf for more details.

- Interfaces:

__init__, forward

- forward(x: Tensor) -> Dict

  - Overview:

    Use encoded embedding tensor to predict IQN's output. Parameter updates with IQN's MLPs forward setup.

  - Arguments:

    - x (torch.Tensor): The encoded embedding tensor with shape (B, N=hidden_size).

  - Returns:

    - outputs (Dict): Run with encoder and head. Return the result prediction dictionary.

  - ReturnsKeys:

    - logit (torch.Tensor): Logit tensor with same size as input x.

    - q (torch.Tensor): Q value tensor of size (num_quantiles, B, M)

    - quantiles (torch.Tensor): quantiles tensor of size (quantile_embedding_size, 1)

  - Shapes:

    - x (torch.Tensor): \((B, N)\), where B is batch size and N is head_hidden_size.

    - logit (torch.FloatTensor): \((B, M)\), where M is action_shape

    - quantiles (torch.Tensor): \((P, 1)\), where P is quantile_embedding_size.

  - Examples:

        >>> import torch
        >>> from ding.model.template.q_learning import IQN
        >>> model = IQN(64, 64)  # arguments: 'obs_shape' and 'action_shape'
        >>> inputs = torch.randn(4, 64)
        >>> outputs = model(inputs)
        >>> assert isinstance(outputs, dict)
        >>> assert outputs['logit'].shape == torch.Size([4, 64])
        >>> # default num_quantiles: int = 32
        >>> assert outputs['q'].shape == torch.Size([32, 4, 64])
        >>> # default quantile_embedding_size: int = 128
        >>> assert outputs['quantiles'].shape == torch.Size([128, 1])

The Bellman update used in IQN is defined in the function iqn_nstep_td_error, which can be found in ding/rl_utils/td.py.
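As a rough guide to what such an update computes, the sketch below implements the quantile Huber loss from the IQN paper for a single transition. It is not the code of iqn_nstep_td_error: the function names and the plain-list inputs are assumptions for this example, and the target quantile values are assumed to be already computed (reward sum plus discounted target-network quantiles) and treated as constants.

```python
def huber(delta, kappa=1.0):
    """Huber loss with threshold kappa."""
    abs_delta = abs(delta)
    if abs_delta <= kappa:
        return 0.5 * delta * delta
    return kappa * (abs_delta - 0.5 * kappa)


def quantile_huber_loss(pred_quantiles, taus, target_quantiles, kappa=1.0):
    """Quantile Huber loss for one transition.

    pred_quantiles[i] is the predicted quantile value at fraction taus[i];
    target_quantiles[j] is a Bellman target sample (treated as a constant).
    """
    total = 0.0
    for theta_i, tau_i in zip(pred_quantiles, taus):
        for target_j in target_quantiles:
            delta = target_j - theta_i  # pairwise TD error
            indicator = 1.0 if delta < 0 else 0.0
            # Asymmetric weight |tau - 1{delta < 0}| applied to the Huber loss.
            total += abs(tau_i - indicator) * huber(delta, kappa) / kappa
    # Sum over predicted quantiles, average over target samples.
    return total / len(target_quantiles)
```

The asymmetric weight is what turns the Huber loss into a quantile regression loss: for a high tau, under-estimation (positive TD error) is penalized more heavily than over-estimation. The `kappa` argument plays the role of the learn.kappa threshold in the config.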

Benchmark

environment |

best mean reward |

evaluation results |

config link |

comparison |

|---|---|---|---|---|

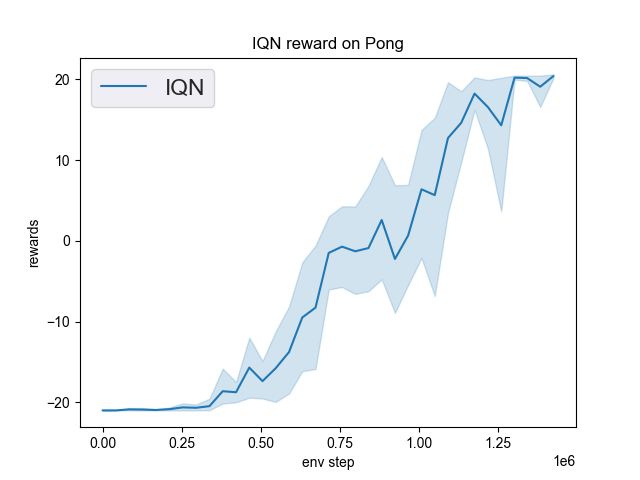

Pong (PongNoFrameskip-v4) |

20 |

|

Tianshou(20) |

|

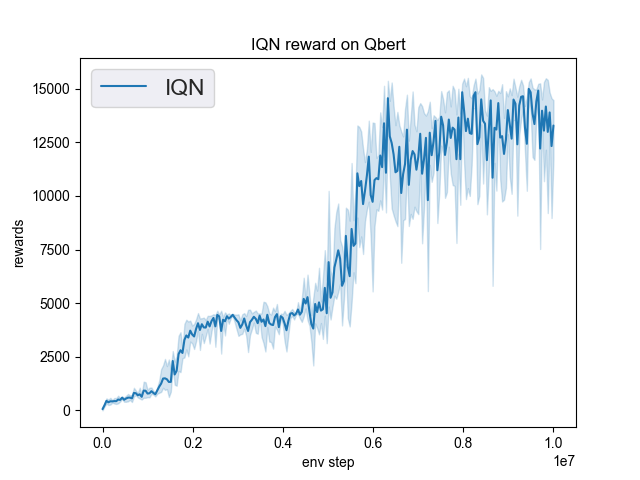

Qbert (QbertNoFrameskip-v4) |

16331 |

|

Tianshou(15520) |

|

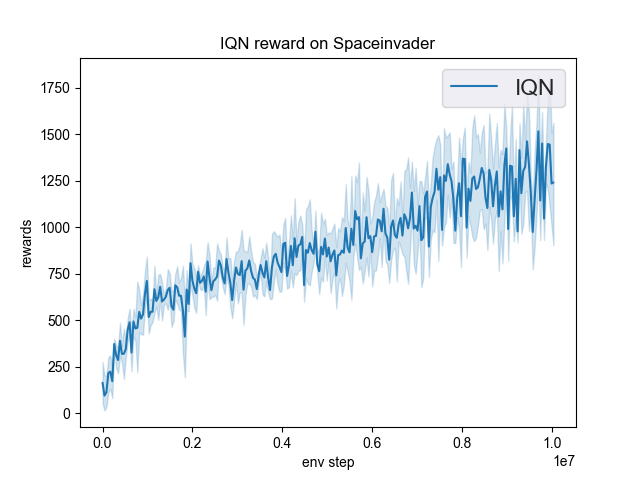

SpaceInvaders (SpaceInvadersNoFrame skip-v4) |

1493 |

|

Tianshou(1370) |

P.S.: 1. The above results are obtained by running the same configuration on five different random seeds (0, 1, 2, 3, 4).

References

(IQN) Will Dabney, Georg Ostrovski, David Silver, Rémi Munos: “Implicit Quantile Networks for Distributional Reinforcement Learning”, 2018; arXiv:1806.06923. https://arxiv.org/pdf/1806.06923