TD3BC¶

Overview¶

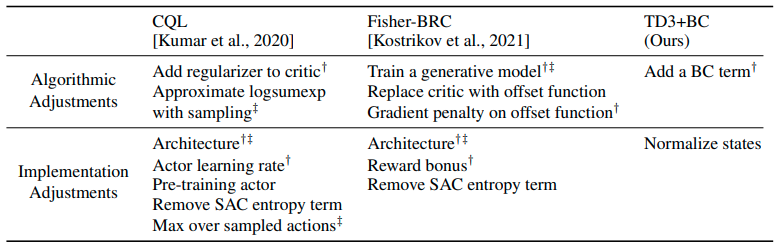

TD3BC, proposed in the 2021 paper "A Minimalist Approach to Offline Reinforcement Learning", is a simple approach to offline RL in which only two changes are made to TD3: a weighted behavior cloning loss is added to the policy update, and the states are normalized. Unlike competing methods, it makes no changes to the architecture or the underlying hyperparameters. The result is a simple baseline that is easy to implement and tune, and it more than halves the overall run time by removing the additional computational overhead of previous methods.

Implementation changes that offline RL algorithms make to the underlying base RL algorithm. † marks details that add hyperparameter(s), and ‡ marks details that add computational cost (see References).

Quick Facts¶

TD3BC is an offline RL algorithm.

TD3BC is based on TD3 and behavior cloning.

Key Equations or Key Graphs¶

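TD3BC simply adds a behavior cloning term to the TD3 policy update in order to regularize the policy:

\[\pi = \arg\max_{\pi} \mathbb{E}_{(s,a)\sim\mathcal{D}}\left[\lambda Q(s,\pi(s)) - (\pi(s)-a)^2\right]\]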

\((\pi(s)-a)^2\) is the behavior cloning term. It acts as a regularizer that pushes the policy towards actions contained in the dataset \(\mathcal{D}\). The hyperparameter \(\lambda\) weighs the Q term against this regularizer and thus controls the strength of the regularization.

Assuming an action range of [−1, 1], the BC term is at most 4 per action dimension, whereas the range of Q depends on the scale of the reward. Consequently, the scalar \(\lambda\) is defined as:

\[\lambda = \frac{\alpha}{\frac{1}{N}\sum_{(s_i,a_i)}\left|Q(s_i,a_i)\right|}\]

which is simply a normalization term based on the average absolute value of Q over a mini-batch of N transitions \((s_i, a_i)\). Because \(\lambda\) rescales the Q term by its own magnitude, this formulation also has the benefit of normalizing the learning rate across tasks with different reward scales. The default value of \(\alpha\) is 2.5.
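A minimal NumPy sketch of the resulting actor objective, assuming Q(s, π(s)) and the policy actions have already been computed for one mini-batch. The helper name td3bc_actor_loss is hypothetical, and averaging the BC term over both batch and action dimensions (as a mean squared error) is an assumption that mirrors the authors' reference code rather than DI-engine's implementation. In an autograd framework, the denominator of \(\lambda\) should be treated as a constant (detached) so that it only rescales the Q term.

```python
import numpy as np


def td3bc_actor_loss(q_values: np.ndarray,
                     policy_actions: np.ndarray,
                     dataset_actions: np.ndarray,
                     alpha: float = 2.5) -> float:
    """Value of the TD3BC actor loss on one mini-batch (hypothetical helper).

    q_values:        Q(s, pi(s)) from the critic, shape (N,)
    policy_actions:  pi(s), shape (N, action_dim), assumed in [-1, 1]
    dataset_actions: actions a from the offline dataset, same shape
    """
    # lambda = alpha / mean|Q| over the mini-batch; with autograd this
    # denominator would be detached so it only rescales the Q term.
    lam = alpha / np.abs(q_values).mean()
    # Behavior cloning regularizer (pi(s) - a)^2, averaged like an MSE loss.
    bc_term = np.mean((policy_actions - dataset_actions) ** 2)
    # Maximizing lambda * Q is written as minimizing -lambda * Q.
    return float(-lam * q_values.mean() + bc_term)
```

Multiplying every Q-value by a positive constant (for example, after rescaling the reward) leaves the term \(-\lambda \cdot \mathrm{mean}(Q)\) unchanged, which is the sense in which \(\lambda\) normalizes the objective across tasks.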

Additionally, every state in the dataset is normalized feature-wise, using the mean and standard deviation of each feature across the dataset, so that each feature has mean 0 and standard deviation 1. This normalization improves the stability of the learned policy.
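The normalization can be sketched with NumPy as follows. normalize_states and normalize_obs are hypothetical helpers, and the small constant eps added to the standard deviation (1e-3 here, as in the authors' reference code) is an assumption that keeps constant features from causing a division by zero. The same statistics should be applied to next states and to observations at evaluation time.

```python
import numpy as np


def normalize_states(states: np.ndarray, eps: float = 1e-3):
    """Feature-wise normalization of all states in an offline dataset.

    states: array of shape (num_transitions, obs_dim).
    Returns the normalized states and the (mean, std) statistics.
    """
    mean = states.mean(axis=0, keepdims=True)        # per-feature mean over the dataset
    std = states.std(axis=0, keepdims=True) + eps    # per-feature std; eps guards against std == 0
    return (states - mean) / std, mean, std


def normalize_obs(obs: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """Apply the dataset statistics to new observations, e.g. during evaluation."""
    return (obs - mean) / std
```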

Implementations¶

The default config is defined as follows:

- class ding.policy.td3_bc.TD3BCPolicy(cfg: EasyDict, model: Module | None = None, enable_field: List[str] | None = None)

- Overview:

Policy class of TD3_BC algorithm.

Since DDPG and TD3 have much in common, this TD3_BC class is derived from the DDPG class by changing _actor_update_freq, _twin_critic and the noise in the model wrapper.

- Property:

learn_mode, collect_mode, eval_mode

Config:

| ID | Symbol | Type | Default Value | Description | Other (Shape) |
|---|---|---|---|---|---|
| 1 | type | str | td3_bc | RL policy register name, refer to registry POLICY_REGISTRY. | This arg is optional, a placeholder. |
| 2 | cuda | bool | True | Whether to use cuda for network. | |
| 3 | random_collect_size | int | 25000 | Number of randomly collected training samples in replay buffer when training starts. | Default to 25000 for DDPG/TD3, 10000 for SAC. |
| 4 | model.twin_critic | bool | True | Whether to use two critic networks or only one. | Default True for TD3. Clipped Double Q-learning method in TD3 paper. |
| 5 | learn.learning_rate_actor | float | 1e-3 | Learning rate for actor network (aka. policy). | |
| 6 | learn.learning_rate_critic | float | 1e-3 | Learning rate for critic network (aka. Q-network). | |
| 7 | learn.actor_update_freq | int | 2 | Number of critic updates per actor update, i.e. the actor network is updated once every actor_update_freq critic updates. | Default 2 for TD3, 1 for DDPG. Delayed Policy Updates method in TD3 paper. |
| 8 | learn.noise | bool | True | Whether to add noise on target network's action. | Default True for TD3, False for DDPG. Target Policy Smoothing Regularization in TD3 paper. |
| 9 | learn.noise_range | dict | dict(min=-0.5, max=0.5,) | Limit for range of target policy smoothing noise, aka. noise_clip. | |
| 10 | learn.ignore_done | bool | False | Determine whether to ignore the done flag. | Use ignore_done only in halfcheetah env. |
| 11 | learn.target_theta | float | 0.005 | Used for soft update of the target network. | aka. Interpolation factor in Polyak averaging for target networks. |
| 12 | collect.noise_sigma | float | 0.1 | Used to add noise during collection, by controlling the sigma of the distribution. | Sample noise from distribution: Ornstein-Uhlenbeck process in DDPG paper, Gaussian process in ours. |
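To make the TD3-specific entries of the table concrete (model.twin_critic, learn.noise, learn.noise_range, learn.actor_update_freq, learn.target_theta), here is a framework-free NumPy sketch of the corresponding update rules. All function names are hypothetical and not DI-engine API; the target-smoothing standard deviation of 0.2 (the TD3 paper's value, not listed in the table above), the discount gamma = 0.99 and the action bounds [-1, 1] are assumptions.

```python
import numpy as np


def smoothed_target_action(target_action: np.ndarray, rng: np.random.Generator,
                           sigma: float = 0.2, noise_min: float = -0.5, noise_max: float = 0.5,
                           act_low: float = -1.0, act_high: float = 1.0) -> np.ndarray:
    # learn.noise / learn.noise_range: target policy smoothing adds clipped
    # Gaussian noise to the target actor's action, then clips to the action range.
    noise = np.clip(rng.normal(0.0, sigma, size=target_action.shape), noise_min, noise_max)
    return np.clip(target_action + noise, act_low, act_high)


def td_target(reward: np.ndarray, done: np.ndarray, q1_next: np.ndarray,
              q2_next: np.ndarray, gamma: float = 0.99) -> np.ndarray:
    # model.twin_critic: clipped double Q-learning bootstraps from the smaller
    # of the two target critics evaluated at the smoothed target action.
    return reward + gamma * (1.0 - done) * np.minimum(q1_next, q2_next)


def soft_update(target_params: dict, online_params: dict, theta: float = 0.005) -> dict:
    # learn.target_theta: Polyak averaging, target <- theta * online + (1 - theta) * target.
    return {k: theta * online_params[k] + (1.0 - theta) * target_params[k] for k in target_params}


def actor_updates_at(critic_step: int, actor_update_freq: int = 2) -> bool:
    # learn.actor_update_freq: delayed policy updates, i.e. the actor is updated
    # once every `actor_update_freq` critic updates (critic_step counts from 1).
    return critic_step % actor_update_freq == 0
```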

Model¶

Here we provide examples of the ContinuousQAC model, which is the default model for TD3BC.

- class ding.model.ContinuousQAC(obs_shape: int | SequenceType, action_shape: int | SequenceType | EasyDict, action_space: str, twin_critic: bool = False, actor_head_hidden_size: int = 64, actor_head_layer_num: int = 1, critic_head_hidden_size: int = 64, critic_head_layer_num: int = 1, activation: Module | None = ReLU(), norm_type: str | None = None, encoder_hidden_size_list: SequenceType | None = None, share_encoder: bool | None = False)

- Overview:

The neural network and computation graph of algorithms related to Q-value Actor-Critic (QAC), such as DDPG/TD3/SAC. This model now supports continuous and hybrid action spaces. The ContinuousQAC is composed of four parts: actor_encoder, critic_encoder, actor_head and critic_head. Encoders are used to extract features from various observations. Heads are used to predict the corresponding Q-value or action logit. In high-dimensional observation spaces like 2D images, we often use a shared encoder for both actor_encoder and critic_encoder. In low-dimensional observation spaces like 1D vectors, we often use different encoders.

- Interfaces:

__init__, forward, compute_actor, compute_critic

- compute_actor(obs: Tensor) -> Dict[str, Tensor | Dict[str, Tensor]]

- Overview:

QAC forward computation graph for the actor part: input an observation tensor to predict an action or action logit.

- Arguments:

obs (torch.Tensor): The input observation tensor data.

- Returns:

outputs (Dict[str, Union[torch.Tensor, Dict[str, torch.Tensor]]]): Actor output dict, which varies with action_space: regression, reparameterization or hybrid.

- ReturnsKeys (regression):

action (torch.Tensor): Continuous action with the same size as action_shape, usually in DDPG/TD3.

- ReturnsKeys (reparameterization):

logit (Dict[str, torch.Tensor]): The predicted reparameterization action logit, usually in SAC. It is a list containing two tensors: mu and sigma. The former is the mean of the Gaussian distribution, the latter is its standard deviation.

- ReturnsKeys (hybrid):

logit (torch.Tensor): The predicted discrete action type logit, with the same dimension as action_type_shape, i.e., all the possible discrete action types.

action_args (torch.Tensor): Continuous action arguments with the same size as action_args_shape.

- Shapes:

obs (torch.Tensor): \((B, N0)\), B is batch size and N0 corresponds to obs_shape.

action (torch.Tensor): \((B, N1)\), B is batch size and N1 corresponds to action_shape.

logit.mu (torch.Tensor): \((B, N1)\), B is batch size and N1 corresponds to action_shape.

logit.sigma (torch.Tensor): \((B, N1)\), B is batch size.

logit (torch.Tensor): \((B, N2)\), B is batch size and N2 corresponds to action_shape.action_type_shape.

action_args (torch.Tensor): \((B, N3)\), B is batch size and N3 corresponds to action_shape.action_args_shape.

- Examples:

```python
>>> import torch
>>> from ding.model import ContinuousQAC
>>> # Regression mode
>>> model = ContinuousQAC(64, 6, 'regression')
>>> obs = torch.randn(4, 64)
>>> actor_outputs = model(obs, 'compute_actor')
>>> assert actor_outputs['action'].shape == torch.Size([4, 6])
>>> # Reparameterization mode
>>> model = ContinuousQAC(64, 6, 'reparameterization')
>>> obs = torch.randn(4, 64)
>>> actor_outputs = model(obs, 'compute_actor')
>>> assert actor_outputs['logit'][0].shape == torch.Size([4, 6])  # mu
>>> assert actor_outputs['logit'][1].shape == torch.Size([4, 6])  # sigma
```

- compute_critic(inputs: Dict[str, Tensor]) -> Dict[str, Tensor]

- Overview:

QAC forward computation graph for the critic part: input observation and action tensors to predict the Q-value.

- Arguments:

inputs (Dict[str, torch.Tensor]): The dict of input data, including obs and action tensors; it also contains logit and action_args tensors in the hybrid action_space.

- ArgumentsKeys:

obs (torch.Tensor): Observation tensor data, now supports a batch of 1-dim vector data.

action (Union[torch.Tensor, Dict]): Continuous action with the same size as action_shape.

logit (torch.Tensor): Discrete action logit, only in hybrid action_space.

action_args (torch.Tensor): Continuous action arguments, only in hybrid action_space.

- Returns:

outputs (Dict[str, torch.Tensor]): The output dict of QAC's forward computation graph for the critic, including q_value.

- ReturnKeys:

q_value (torch.Tensor): Q value tensor with the same size as batch size.

- Shapes:

obs (torch.Tensor): \((B, N1)\), where B is batch size and N1 is obs_shape.

logit (torch.Tensor): \((B, N2)\), B is batch size and N2 corresponds to action_shape.action_type_shape.

action_args (torch.Tensor): \((B, N3)\), B is batch size and N3 corresponds to action_shape.action_args_shape.

action (torch.Tensor): \((B, N4)\), where B is batch size and N4 is action_shape.

q_value (torch.Tensor): \((B, )\), where B is batch size.

- Examples:

```python
>>> import torch
>>> from ding.model import ContinuousQAC
>>> inputs = {'obs': torch.randn(4, 8), 'action': torch.randn(4, 1)}
>>> model = ContinuousQAC(obs_shape=(8, ), action_shape=1, action_space='regression')
>>> assert model(inputs, mode='compute_critic')['q_value'].shape == (4, )  # q value
```

- forward(inputs: Tensor | Dict[str, Tensor], mode: str) -> Dict[str, Tensor]

- Overview:

QAC forward computation graph: input an observation tensor to predict the Q-value or action logit. Different modes forward through different network modules to get different outputs and save computation.

- Arguments:

inputs (Union[torch.Tensor, Dict[str, torch.Tensor]]): The input data for the forward computation graph. For compute_actor, it is the observation tensor; for compute_critic, it is the dict data including obs and action tensors.

mode (str): The forward mode; all the modes are defined at the beginning of this class.

- Returns:

output (Dict[str, torch.Tensor]): The output dict of the QAC forward computation graph, whose key-values vary with the forward mode.

- Examples (Actor):

```python
>>> import torch
>>> from ding.model import ContinuousQAC
>>> # Regression mode
>>> model = ContinuousQAC(64, 6, 'regression')
>>> obs = torch.randn(4, 64)
>>> actor_outputs = model(obs, 'compute_actor')
>>> assert actor_outputs['action'].shape == torch.Size([4, 6])
>>> # Reparameterization mode
>>> model = ContinuousQAC(64, 6, 'reparameterization')
>>> obs = torch.randn(4, 64)
>>> actor_outputs = model(obs, 'compute_actor')
>>> assert actor_outputs['logit'][0].shape == torch.Size([4, 6])  # mu
>>> assert actor_outputs['logit'][1].shape == torch.Size([4, 6])  # sigma
```

- Examples (Critic):

```python
>>> import torch
>>> from ding.model import ContinuousQAC
>>> inputs = {'obs': torch.randn(4, 8), 'action': torch.randn(4, 1)}
>>> model = ContinuousQAC(obs_shape=(8, ), action_shape=1, action_space='regression')
>>> assert model(inputs, mode='compute_critic')['q_value'].shape == (4, )  # q value
```

Benchmark¶

environment |

best mean reward |

evaluation results |

config link |

comparison |

|---|---|---|---|---|

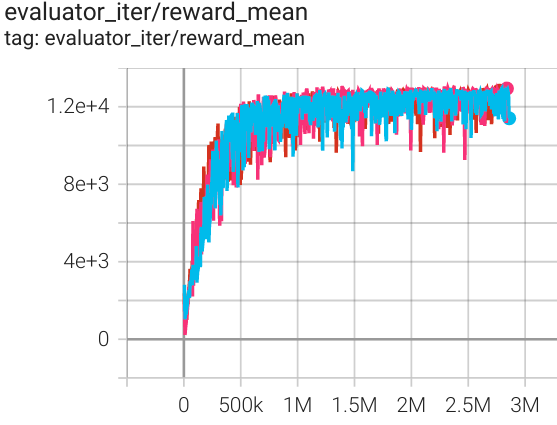

Halfcheetah (Medium Expert) |

13037 |

|

d3rlpy(12124) |

|

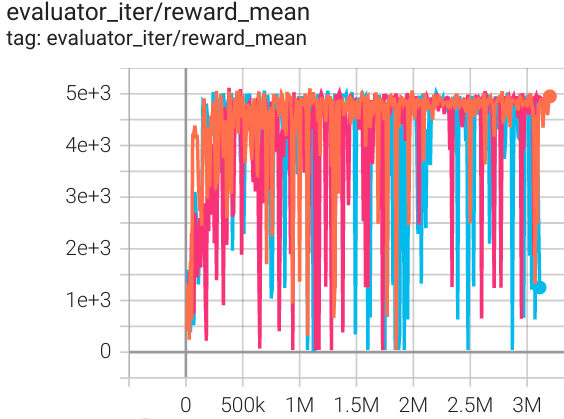

Walker2d (Medium Expert) |

5066 |

|

d3rlpy(5108) |

|

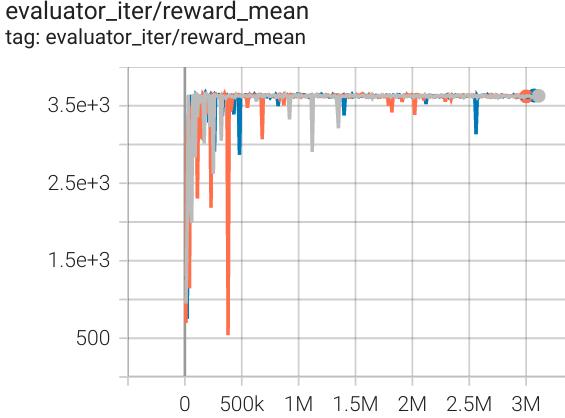

Hopper (Medium Expert) |

3653 |

|

d3rlpy(3690) |

| environment | random | medium replay | medium expert | medium | expert |
|---|---|---|---|---|---|
| Halfcheetah | 1592 | 5192 | 13037 | 5257 | 13247 |
| Walker2d | 985 | 2317 | 5066 | 3826 | 5232 |
| Hopper | 345 | 1724 | 3653 | 3268 | 3664 |

Note: the D4RL environment used in this benchmark can be found here.

References¶

Scott Fujimoto, Shixiang Shane Gu: “A Minimalist Approach to Offline Reinforcement Learning”, 2021; arXiv:2106.06860 (https://arxiv.org/abs/2106.06860).

Scott Fujimoto, Herke van Hoof, David Meger: “Addressing Function Approximation Error in Actor-Critic Methods”, 2018; arXiv:1802.09477 (http://arxiv.org/abs/1802.09477).